In recent decades, the development of artificial intelligence has helped narrow the gap between what humans and machines can do. Machines now increasingly take over jobs that were until recently reserved for humans. However, development and research in machine learning and artificial intelligence are far from over; the process is still going on. One of the newer advanced technologies is so-called deep learning, which is still being developed, and one of its most important tools is a type of model called the convolutional neural network.

Machine Learning And Convolutional Neural Networks

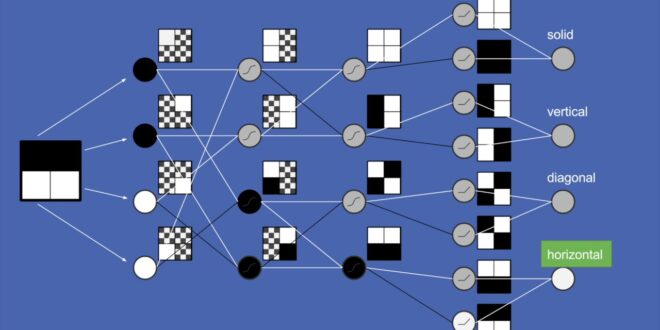

Deep learning has evolved over the decades and has proved to be a very useful ally in handling large amounts of data. Along with it, techniques such as image and audio recognition were developed. The development of deep neural networks, and especially convolutional neural networks, has contributed greatly to this technology and its techniques. The impressive results of neural networks in numerous artificial intelligence tasks show that this is an area with great potential. Whether the input is sound, images, video, text, or any other kind of data, neural networks are increasingly the learning algorithm of choice. Convolutional neural networks have been key to matching or even exceeding human performance on some image-recognition benchmarks, and we will focus on them in this text.

A Brief History Of Neural Networks

Although neural networks in machine learning flourished only at the beginning of the 21st century, the idea of a computer-based neural network that works on the principle of the human brain appeared in the 1940s. The history of neural networks can be divided into two main periods. The first runs from the 1940s until the 1970s, when interest in artificial neural networks subsided. The second began in the 1980s and extends to the present day.

We take a 1943 article by McCulloch and Pitts as the beginning of the modern period of neural network development. It shows that even networks of very simple artificial neurons can, in principle, compute arbitrary arithmetic or logic functions. The first successful neurocomputer, the Mark I Perceptron, was developed in 1957 and 1958. Shortly afterward, a neural network was used to solve a practical problem for the first time: a network model called Madaline, usually cited as the first neural network applied to a real-world task, served as an adaptive filter that eliminates echoes on telephone lines and remained in commercial use for decades.
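The McCulloch and Pitts result can be made concrete with a small sketch. The Python code below uses a simplified threshold unit in the spirit of their model: it outputs 1 when the weighted sum of its binary inputs reaches a threshold, and 0 otherwise. The weights and thresholds are hand-picked assumptions for illustration, not values from the 1943 paper. Combining a few units into XOR shows how networks of such units build logic functions that a single unit cannot compute.

```python
def threshold_unit(inputs, weights, threshold):
    """Fire (return 1) if the weighted input sum reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

# Hand-picked weights and thresholds (illustrative assumptions).
def logic_and(a, b):
    return threshold_unit([a, b], [1, 1], threshold=2)

def logic_or(a, b):
    return threshold_unit([a, b], [1, 1], threshold=1)

def logic_not(a):
    return threshold_unit([a], [-1], threshold=0)

# A single threshold unit cannot compute XOR (it is not linearly separable),
# but a small network of units can: XOR(a, b) = OR(a, b) AND NOT AND(a, b).
def logic_xor(a, b):
    return logic_and(logic_or(a, b), logic_not(logic_and(a, b)))

for a in (0, 1):
    for b in (0, 1):
        print(a, b, logic_and(a, b), logic_or(a, b), logic_xor(a, b))
```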

From Stagnation To The Renaissance

Numerous early successes set extremely high expectations for neural networks, and there were frequent predictions that computers capable of reading, writing, and speaking would appear within a few years. Such expectations made the field more experimental than practical, which became a problem over time. A lack of new ideas put the field in a difficult position, and research stagnated through the 1970s. During that period, although without great innovations or discoveries, the foundations were laid for the renaissance of neural networks that began in the 1980s and continues today.

How Neural Networks Work In Machine Learning

Neural network algorithms were created as an attempt to imitate the way the human brain learns, that is, the way it classifies things. The computations performed by neural networks are demanding, so only relatively recent advances in technology have enabled their widespread use. Imitating the brain is very hard, especially given the wide range of tasks it handles and the amount of data it processes daily. One hypothesis holds that the brain does not run a large number of different programs for its many processes, but a single learning algorithm. This is only a hypothesis, but there is some scientific evidence to support it, notably the so-called neural rewiring (or neural switching) experiments, which suggest that a single learning algorithm may be able to process many different kinds of input.

This idea has also influenced machine learning, where the goal is to find a general method that can learn to process many different kinds of data.

If you want to learn more about convolution, how CNNs work, and the steps involved, sites such as serokell.io/blog/introduction-to-convolutional-neural-networks explain in detail, step by step, how a convolutional neural network functions in machine learning.
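As a rough illustration of the core operation, the sketch below slides a small kernel over a grayscale image and computes a weighted sum at each position (no padding, stride 1), then applies a ReLU and 2×2 max pooling, building blocks that are typically stacked in a CNN. Like the convolution layers of common deep learning libraries, it computes cross-correlation (the kernel is not flipped). The 6×6 test image and the vertical-edge kernel are hypothetical; in a real network the kernel values are learned during training.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2D cross-correlation with stride 1, as used in CNN layers."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Keep positive responses, zero out negative ones."""
    return np.maximum(x, 0)

def max_pool2x2(x):
    """Non-overlapping 2x2 max pooling (drops an odd trailing row/column)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# Hypothetical 6x6 image: dark left half, bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hypothetical vertical-edge kernel: responds to dark-to-bright transitions.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

features = relu(conv2d(image, kernel))  # 4x4 feature map
pooled = max_pool2x2(features)          # 2x2 after pooling
print(features)
print(pooled)
```

The feature map is large only in the columns where the kernel straddles the edge, which is the sense in which a convolutional filter "detects" a pattern. A CNN learns many such kernels at once and stacks these layers so that later layers respond to combinations of earlier patterns.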

Learning And Gradient Descent

Once you learn how to build a convolutional neural network, you will also learn how to compute the network's output and its error for given input data. What remains is the problem of determining the neurons' threshold values (biases) and weights. This process is called learning, and a key role in training neural networks is played by an optimization algorithm called gradient descent, which repeatedly adjusts each parameter in the direction that reduces the error. Computing the gradient of a neural network directly is not an easy task; in practice it is computed with the backpropagation algorithm, and understanding the complete process requires some definitions and a mathematical background.
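The idea can be shown on the smallest possible case: a single sigmoid neuron with weights `w` and a bias `b` (the threshold value), trained by plain gradient descent to reduce its binary cross-entropy error. For one neuron the gradient can be written out by hand with the chain rule; in a deep network, backpropagation computes the same kind of gradient layer by layer. The toy dataset (the logical OR function), the learning rate, and the number of steps are arbitrary assumptions for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Toy data (assumption): learn the logical OR of two binary inputs.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 1], dtype=float)

w = np.zeros(2)       # weights
b = 0.0               # bias, i.e. the neuron's threshold value
learning_rate = 1.0   # step size (hypothetical choice)

def loss(w, b):
    """Mean binary cross-entropy: the network's error on the data."""
    p = sigmoid(X @ w + b)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))

initial_loss = loss(w, b)
for step in range(1000):
    p = sigmoid(X @ w + b)          # forward pass: the network's output
    error = p - y                   # dLoss/dz for sigmoid + cross-entropy
    grad_w = X.T @ error / len(y)   # gradient with respect to the weights
    grad_b = error.mean()           # gradient with respect to the bias
    # Gradient descent: step against the gradient to reduce the error.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_loss = loss(w, b)
predictions = (sigmoid(X @ w + b) > 0.5).astype(int)
print(initial_loss, final_loss, predictions)
```

The learning rate controls the size of each step: too small and training is slow, too large and the updates can overshoot and fail to converge.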

Some Applications Of Neural Networks

1. Super-Resolution

Image super-resolution, that is, increasing the resolution of an image, is an interesting problem often seen in crime series and movies. Although the results shown on screen are not currently possible, the question remains whether the same image can be generated at larger dimensions without an obvious loss of quality. In practice, numerical interpolation methods such as bilinear or bicubic interpolation are most often used for this problem. However, some studies show that neural networks have great potential here.
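For reference, the bilinear baseline mentioned above can be sketched in a few lines: each output pixel is mapped back to a fractional position in the input image, and its value is a weighted average of the four nearest input pixels. This simplified version assumes a 2D grayscale array and an integer scale factor, and aligns the corner pixels of input and output; real image libraries differ in how they align pixel grids and handle borders, so treat it as an illustration rather than a drop-in replacement.

```python
import numpy as np

def bilinear_upscale(image, scale):
    """Upscale a 2D grayscale image by an integer factor with bilinear interpolation.

    Uses 'align corners' mapping: output corners coincide with input corners.
    """
    in_h, in_w = image.shape
    out_h, out_w = in_h * scale, in_w * scale
    # Fractional source coordinates for every output row and column.
    ys = np.linspace(0, in_h - 1, out_h)
    xs = np.linspace(0, in_w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = (ys - y0)[:, None]   # vertical weights, shape (out_h, 1)
    wx = (xs - x0)[None, :]   # horizontal weights, shape (1, out_w)
    # Gather the four input neighbors of every output pixel.
    top_left = image[np.ix_(y0, x0)]
    top_right = image[np.ix_(y0, x1)]
    bottom_left = image[np.ix_(y1, x0)]
    bottom_right = image[np.ix_(y1, x1)]
    # Interpolate horizontally, then vertically.
    top = top_left * (1 - wx) + top_right * wx
    bottom = bottom_left * (1 - wx) + bottom_right * wx
    return top * (1 - wy) + bottom * wy

small = np.array([[0.0, 1.0],
                  [2.0, 3.0]])
large = bilinear_upscale(small, 2)
print(large)
```

Interpolation can only blend existing pixel values, so it produces smooth but blurry enlargements; a neural network trained on pairs of low- and high-resolution images can instead learn to add plausible detail.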

2. Face Recognition

Unlike ordinary classification, face recognition involves a variable number of categories (one per person, with new people added over time), which makes it much harder to solve. Today's solutions instead generate a descriptor vector for each face image, such that the distance between the vectors is small for images of the same person and large for images of different people. Recognition then reduces to comparing distances, so a new person can be added by storing their vector, without retraining the network.
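Once a network has produced such descriptor vectors (embeddings), comparing faces reduces to a distance check. The sketch below assumes the embeddings already exist: the short 4-dimensional vectors are made up, whereas real systems use much longer vectors produced by a trained CNN. It uses Euclidean distance between length-normalized vectors, one common choice, and a hypothetical threshold of 0.8; in practice the threshold is tuned on validation data.

```python
import numpy as np

def l2_normalize(v):
    """Scale a vector to unit length so distances depend only on direction."""
    return v / np.linalg.norm(v)

def face_distance(emb_a, emb_b):
    """Euclidean distance between two normalized embeddings."""
    return float(np.linalg.norm(l2_normalize(emb_a) - l2_normalize(emb_b)))

def same_person(emb_a, emb_b, threshold=0.8):
    """Verification: are two embeddings close enough to be the same person?"""
    return face_distance(emb_a, emb_b) < threshold

def identify(query, gallery, threshold=0.8):
    """Return the name of the closest known face, or None if nobody is close enough."""
    best_name, best_dist = None, float("inf")
    for name, emb in gallery.items():
        d = face_distance(query, emb)
        if d < best_dist:
            best_name, best_dist = name, d
    return best_name if best_dist < threshold else None

# Made-up embeddings standing in for a CNN's output (hypothetical data).
gallery = {
    "alice": np.array([0.9, 0.1, 0.0, 0.4]),
    "bob": np.array([0.0, 0.8, 0.6, 0.0]),
}
query = np.array([0.85, 0.15, 0.05, 0.45])  # a new photo, assumed to show Alice

print(identify(query, gallery))

# Adding a new person only requires storing their embedding; no retraining.
gallery["carol"] = np.array([0.1, 0.0, 0.9, -0.4])
print(identify(np.array([0.1, 0.05, 0.85, -0.35]), gallery))
```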

The Bottom Line

In the end, although complex and computationally demanding, convolutional neural networks are a significant development and a great help in areas such as computer vision and related fields. Their importance lies above all in their ability to learn, directly from data, to recognize the patterns that data processing requires.

Comeau Computing Tech Magazine 2024
